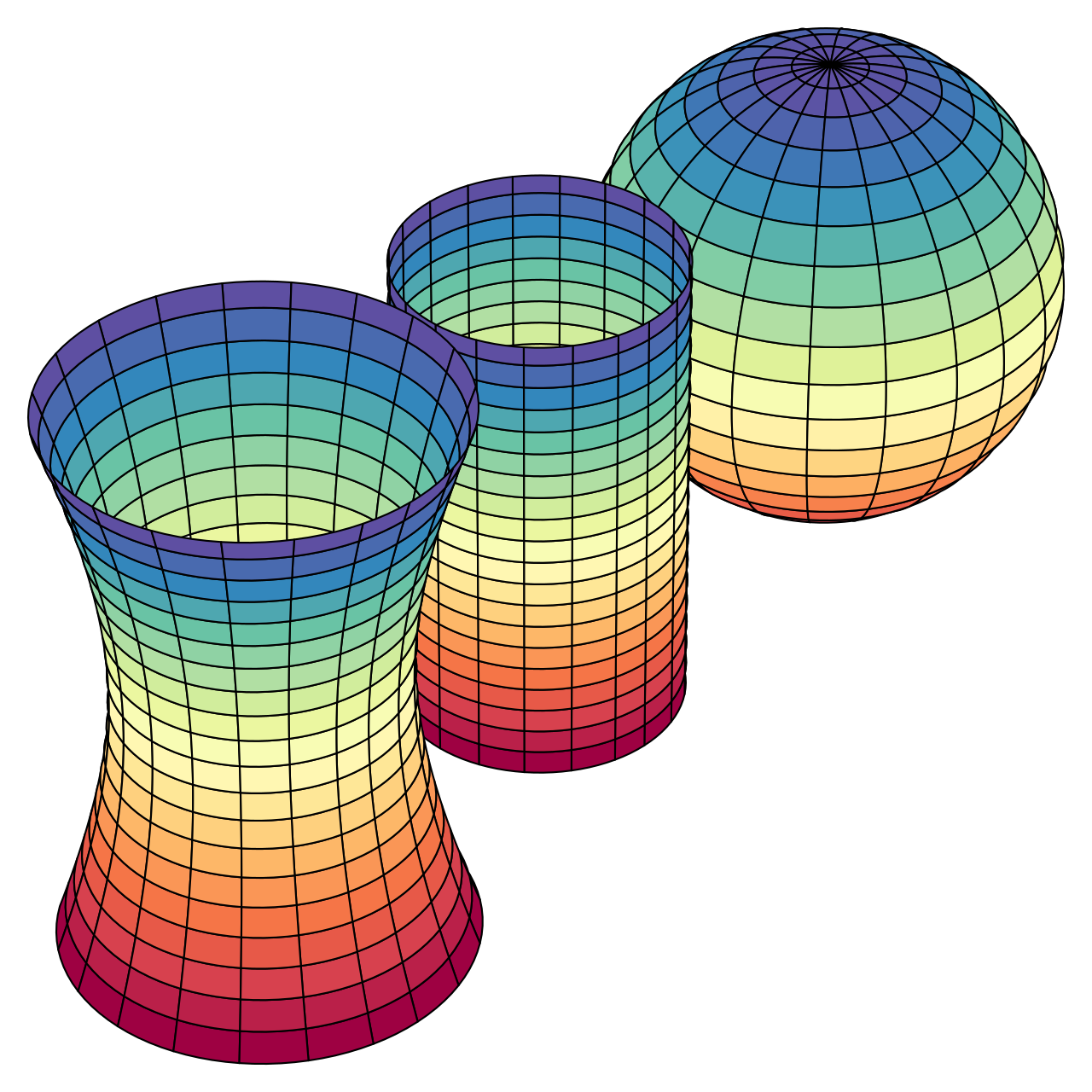

Figure: Dual Tomographic Compression Performance, Süzen, 2025.

Preamble

Randomness is elusive, and it is probably one of the outstanding concepts in human scientific endeavour, along with gravity. Kolmogorov complexity was a novel attempt to answer "what is randomness?". The idea that the length of the smallest model that can generate a random sequence determines its complexity was a turning point in the history of science. Similarly, it implies choosing the simplest model for explaining a phenomenon. That's why Kolmogorov's work was also supported by the ideas of Solomonoff and Chaitin. A recent work explores this algorithmic information from a compression perspective with Gibbs entropy.
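To make the "smallest model" idea concrete, here is a minimal sketch. Kolmogorov complexity itself is uncomputable, so the sketch uses the length of a zlib-compressed byte string as a crude upper-bound proxy. The helper name `compressed_length` and the two example sequences are illustrative assumptions, not something taken from the paper.

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Length in bytes of zlib-compressed data (a rough upper-bound proxy,
    not the true Kolmogorov complexity, which is uncomputable)."""
    return len(zlib.compress(data, 9))


# A highly regular sequence: a very short program ("repeat '01'") generates it.
regular = b"01" * 5000

# Bytes from the operating system's entropy source: no short description expected.
random_like = os.urandom(10000)

print("regular    :", len(regular), "->", compressed_length(regular))
print("random-like:", len(random_like), "->", compressed_length(random_like))
```

Both inputs have the same length, yet the regular one shrinks dramatically while the random-like one barely shrinks at all, which is the intuition behind defining randomness through the shortest description.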

A strange tale of a path from applied research to a fundamental proposition.

While developing model compression algorithms, I noticed an amazing behaviour: information, entropy and compression have a more in-depth connection over the course of a compression process.

New concepts in compression and randomness via train-compress cycles

Here, we explain the new concepts for both deep learning model compression and the interplay between compression and algorithmic randomness.

Inverse compressed sensing (iCS): Normally, the compressed sensing (CS) procedure is applied to reconstruct an unknown signal from fewer measurements. In the case of deep learning train-compress cycles, the weights are known at a given point in the training cycle. If we create hypothetical measurements using CS formulations, we can reconstruct a sparse projection of the weights.

Dual Tomographic Compression (DTC): Applying iCS to the input and output at the neuronal level, layer-wise, simultaneously.

Weight rays: A reconstructed output vector of DTC, i.e., the weights at a given sparsity level, though they are not generated in isolation but within a train-compress cycle.

Gibbs randomness proposition: An extension of Kolmogorov complexity to a compression process. It states that directed randomness is the same as complexity reduction, i.e., compression.
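The iCS idea above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: the sensing matrix is assumed to be i.i.d. Gaussian, and recovery uses iterative hard thresholding, a standard CS solver swapped in here for simplicity. The function name `inverse_cs` and all parameter values are hypothetical.

```python
import random


def matvec(A, x):
    """Return A @ x for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Return A.T @ r for a matrix stored as a list of rows."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def hard_threshold(x, k):
    """Keep the k largest-magnitude entries of x and zero out the rest."""
    order = sorted(range(len(x)), key=lambda j: abs(x[j]), reverse=True)
    keep = set(order[:k])
    return [v if j in keep else 0.0 for j, v in enumerate(x)]


def inverse_cs(weights, m, k, n_iter=200, seed=0):
    """Hypothetical iCS sketch: measure known weights, recover a k-sparse vector."""
    rng = random.Random(seed)
    n = len(weights)
    # Assumption: i.i.d. Gaussian sensing matrix with m < n rows.
    A = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
    # Hypothetical measurements of the already-known weights.
    y = matvec(A, weights)
    # The squared Frobenius norm bounds the largest eigenvalue of A^T A,
    # so this step size keeps the measurement residual from increasing.
    step = 1.0 / sum(a * a for row in A for a in row)
    x = [0.0] * n
    for _ in range(n_iter):
        residual = [yi - pi for yi, pi in zip(y, matvec(A, x))]
        grad = rmatvec(A, residual)
        x = hard_threshold([xi + step * gi for xi, gi in zip(x, grad)], k)
    return x, A, y


# Usage: a stand-in "weight vector" of 32 values, 16 measurements, sparsity 8.
rng = random.Random(1)
weights = [rng.gauss(0.0, 1.0) for _ in range(32)]
sparse_w, A, y = inverse_cs(weights, m=16, k=8)
print(sum(1 for v in sparse_w if v != 0.0), "non-zero weights kept")
```

In a train-compress cycle, a projection like this would presumably be applied repeatedly between training steps; how DTC combines the input-side and output-side projections is described in the paper and is not reproduced here.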

Conclusion

A new technique called DTC can be used to train deep learning models with model compression on the fly. This gives rise to massively energy-efficient deep learning, reaching an almost 98% reduction in energy use. Moreover, the technique also demonstrates an extended version of Kolmogorov complexity.

Further reading

Paper & codes are released:

- Paper: Gibbs randomness-compression proposition: An efficient deep learning, arXiv:2505.23869 (2025)
- Codes: https://github.com/msuzen/research/tree/main/gibbs-randomness-compression

Cite as