|

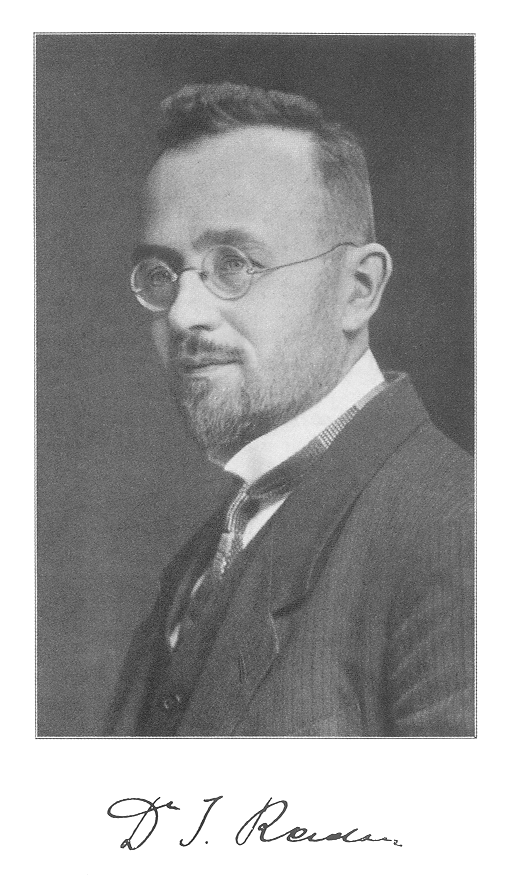

| Radon, founder of inverse problems (Wikipedia) |

This concept has been well known in communities ranging from geophysics to image reconstruction for many decades. Underspecification stems from Hadamard's definition of a well-posed problem: a solution must exist, be unique, and depend continuously on the data. It isn't a new problem. If you research underspecification in machine learning, please make sure the relevant literature on ill-posed problems has been studied thoroughly before making strong statements. That would help and would prevent reinventing the wheel. One technique everyone is aware of is L2 regularisation, which is used to reduce the ill-posedness of machine learning models.

As for why a deployed model's performance degrades over time, ill-posedness plays a role, but it isn't the sole reason. A large body of inverse-problems literature is dedicated to solving these issues. If underspecification were the sole cause of deployed machine learning systems degrading over time, we could have reduced the degradation by applying strong L1 regularisation to reduce "the feature selection bias", thereby lowering the effect of underspecification. Especially in deep learning models, underspecification shouldn't be an issue, owing to the representation learning that deep learning models naturally bring, provided the inputs cover the basic learning space.
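To make the link between L2 regularisation and ill-posedness concrete, here is a minimal sketch in Python. It is a toy example of our own, not taken from any cited work: a single observation x1 + x2 = 2 with two unknowns has infinitely many exact fits, so the uniqueness requirement of a well-posed problem fails, and adding an L2 (Tikhonov/ridge) penalty lam * ||x||^2 makes the normal equations invertible. The helper names `solve`, `ridge` and `residual` and the chosen `lam` values are hypothetical, and plain Gaussian elimination is used only to keep the sketch dependency-free.

```python
# Toy illustration (hypothetical helpers, not from the post): L2 / Tikhonov
# regularisation turning a non-unique least-squares problem into a unique one.


def solve(M, v, tol=1e-12):
    """Solve the square system M x = v by Gaussian elimination with partial pivoting.

    Raises ValueError when a pivot is (numerically) zero, i.e. the system
    has no unique solution.
    """
    n = len(M)
    # Augmented matrix [M | v], copied so the caller's data is untouched.
    aug = [[float(m) for m in row] + [float(rhs)] for row, rhs in zip(M, v)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry into the pivot row.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < tol:
            raise ValueError("singular system: solution is not unique")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def ridge(A, b, lam):
    """Minimise ||A x - b||^2 + lam * ||x||^2 via the normal equations
    (A^T A + lam I) x = A^T b. With lam = 0 this is plain least squares."""
    rows, n = len(A), len(A[0])
    AtA = [[sum(A[k][i] * A[k][j] for k in range(rows)) + (lam if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    Atb = [sum(A[k][i] * b[k] for k in range(rows)) for i in range(n)]
    return solve(AtA, Atb)


def residual(A, b, x):
    """Squared data misfit ||A x - b||^2."""
    return sum((sum(a * xi for a, xi in zip(row, x)) - bi) ** 2
               for row, bi in zip(A, b))


if __name__ == "__main__":
    # One observation, two unknowns: x1 + x2 = 2 (an underdetermined toy problem).
    A = [[1.0, 1.0]]
    b = [2.0]

    # Several different "models" fit the data perfectly: uniqueness fails.
    for candidate in ([2.0, 0.0], [1.0, 1.0], [0.0, 2.0]):
        print(candidate, "misfit:", residual(A, b, candidate))

    # The unregularised normal equations are singular.
    try:
        ridge(A, b, 0.0)
    except ValueError as err:
        print("lam = 0:", err)

    # Any lam > 0 gives a unique answer; both entries equal 2 / (2 + lam).
    for lam in (1.0, 0.1, 1e-6):
        print("lam =", lam, "->", ridge(A, b, lam))
```

As `lam` shrinks, the regularised solution approaches [1, 1], the minimum-norm exact fit. The penalty picks one model out of many that explain the data equally well, which is the sense in which L2 regularisation reduces ill-posedness.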