Optimal learning: Meta-optimization

Many papers directly equate the “machine” learning problem, that is, algorithmic learning as opposed to human or animal learning, with an optimisation problem. Unfortunately, contrary to common belief, machine learning is not an optimisation problem. For example, take the term optimal learning strategy: if we replace learning with optimisation, we end up, at some point, with an absurd term such as optimal optimisation strategy.

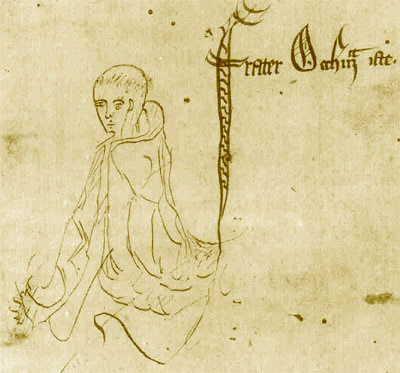

Turing machine (Wikipedia)

Computable functions to learning

Fundamentally, we do not know how human learning can be mapped into an algorithm, whether there are computable-function analogs of human learning, or whether human intelligence and its artificial analog can be represented in a Turing-computable manner.