
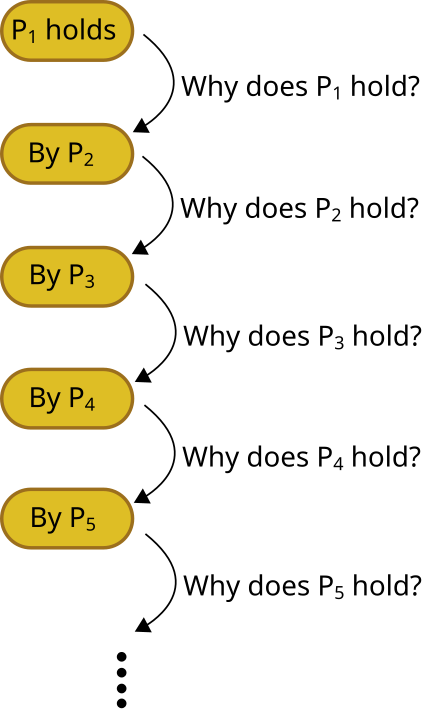

Figure: Infinite Regress (Wikipedia)

Preamble

The use of artificial intelligence (AI) systems has surged and is now standard practice in mid- to large-scale industries. These systems cannot reason by construction, yet legal requirements dictate that if a machine learning/AI model makes a decision, such as granting or refusing a loan, the people affected by that decision have a right to know the reason. It is well known, however, that machine learning models cannot reason or provide reasoning out of the box. Apart from research efforts that modify conventional machine learning systems to include some form of reasoning, building so-called explainable or interpretable machine learning solutions on top of conventional models is very popular. There is no accepted definition of what an explanation of a machine learning system should entail, but in general this field of study is called explainable artificial intelligence.

One of the most widely used and popularised families of techniques builds a secondary model on top of the primary model's behaviour and tries to come up with a story of how the primary model, the AI system, arrived at its answers. Although this approach sounds like a good solution at first glance, it actually traps us in an infinite regress, a dilemma: who is going to explain the explainer?

Avoiding the 'Who is going to explain the explainer?' dilemma

The resolution lies in completely avoiding explainer models, and any techniques that rely on optimisations of a similar sort. We should rely solely on so-called counterfactual generators. These generators repeatedly query the AI system to generate data on its behaviour, in order to answer a what-if scenario or a set of what-if scenarios, corresponding to a set of reasoning statements.
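To make the repeated-query idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: `toy_loan_model` is a hypothetical stand-in for an opaque AI system, the feature names and grid values are invented, and `what_if` and `counterfactuals` are hypothetical helper names. No secondary model is fitted; the generator only queries the system and records which single-feature changes flip the decision.

```python
from typing import Callable, Dict, List, Tuple

Instance = Dict[str, float]
Model = Callable[[Instance], int]


def toy_loan_model(x: Instance) -> int:
    """Hypothetical black box (assumption): 1 = approve, 0 = reject."""
    score = 0.5 * x["income"] - 0.8 * x["debt"] + 2.0 * x["years_employed"]
    return 1 if score >= 20.0 else 0


def what_if(model: Model, instance: Instance, feature: str,
            values: List[float]) -> List[Tuple[float, int]]:
    """Query the model repeatedly, changing one feature and fixing the rest."""
    results = []
    for v in values:
        probe = dict(instance)  # copy, so the original instance is untouched
        probe[feature] = v
        results.append((v, model(probe)))
    return results


def counterfactuals(model: Model, instance: Instance,
                    grid: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Collect single-feature changes that flip the baseline decision."""
    baseline = model(instance)
    flips = []
    for feature, values in grid.items():
        for v, decision in what_if(model, instance, feature, values):
            if decision != baseline:
                flips.append((feature, v))
    return flips


# Illustrative applicant and query grid (made-up numbers).
applicant = {"income": 50.0, "debt": 20.0, "years_employed": 2.0}
grid = {
    "income": [50.0, 60.0, 70.0, 80.0],
    "debt": [20.0, 10.0, 0.0],
    "years_employed": [2.0, 5.0, 10.0],
}

baseline = toy_loan_model(applicant)
flips = counterfactuals(toy_loan_model, applicant, grid)
for feature, value in flips:
    print(f"Decision flips if {feature} were {value} instead of {applicant[feature]}")
```

With these toy numbers the applicant is rejected, and each recorded flip reads as a reasoning statement of the form "the loan would have been approved if income were 70.0 instead of 50.0". The statements come directly from the system's own answers to the queries, so there is no explainer left to explain.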

What are counterfactual generators?


Figure: Counterfactual generator, instance based.


Outlook

We rule out using secondary machine learning models, or any models at all, including simple linear regression, to build an explanation of a machine learning system. Instead, we claim that reasoning can be achieved at its simplest level with counterfactual generators based on the system's behaviour under different query sets. This seems to be a good direction, as reasoning can be defined as "algebraically manipulating previously acquired knowledge in order to answer a new question", following Léon Bottou [ Bottou ]. It is of course also partly in line with Judea Pearl's causal inference revolution, though replacing the machine learning model with a causal model entirely would be closer to what causal inference recommends.

References and further reading

[ Goldstein2013 ] Peeking Inside the Black Box: Visualising Statistical Learning with Plots of Individual Conditional Expectation, Goldstein et al., arXiv

[ Lipton ] The Mythos of Model Interpretability, Z. Lipton arXiv

[ Molnar ] Interpretable ML book, C. Molnar url

[ Du2020 ] Techniques for Interpretable Machine Learning, Du et al., doi

[ wang14 ] Falling Rule Lists, Wang and Rudin, arXiv

Please note that even though counterfactuals are valuable in this context, graphs are inherently more powerful in representing knowledge, and causality can flourish on DAGs. Plain counterfactuals over sets of variables are by construction less informative compared to DAGs.
