Preamble

|

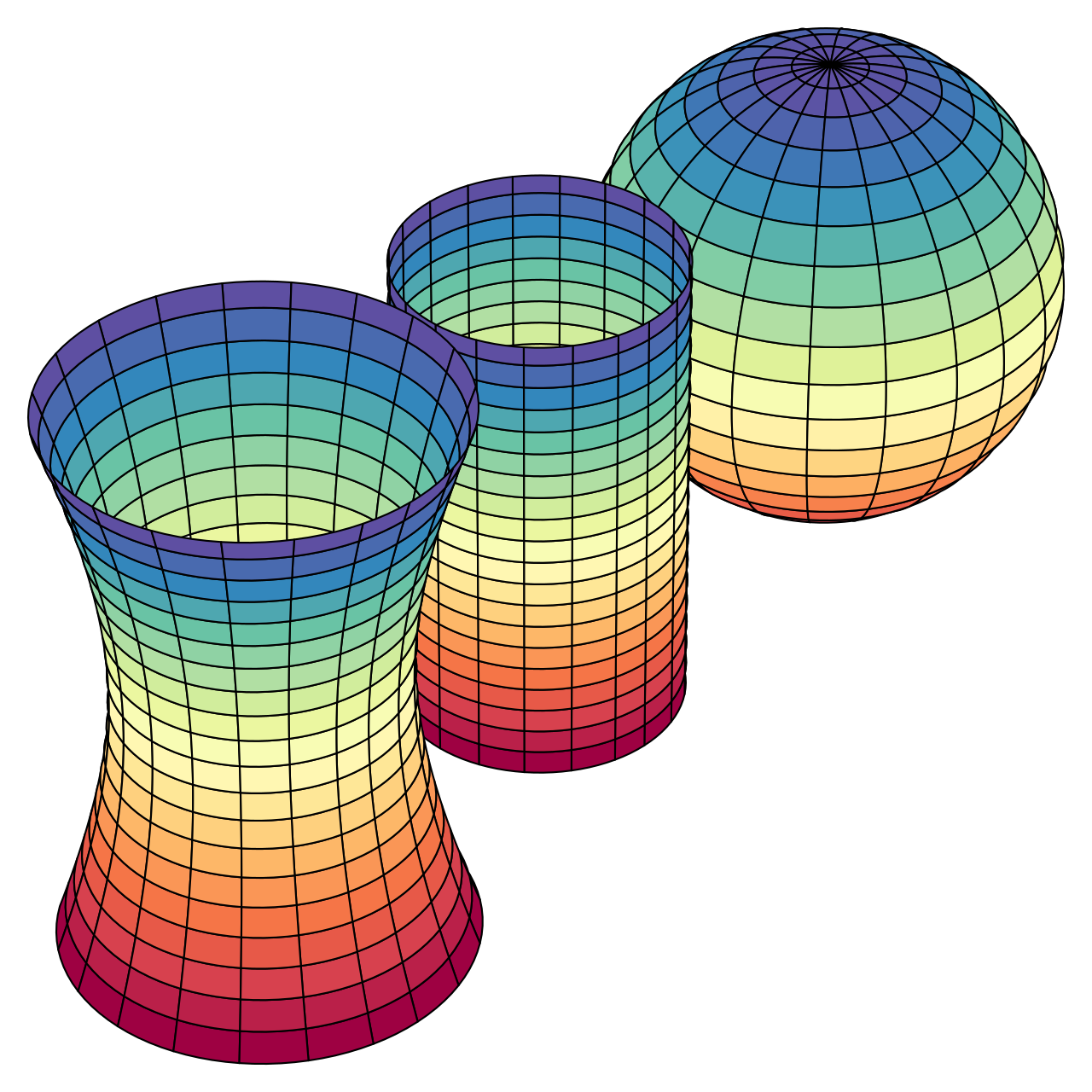

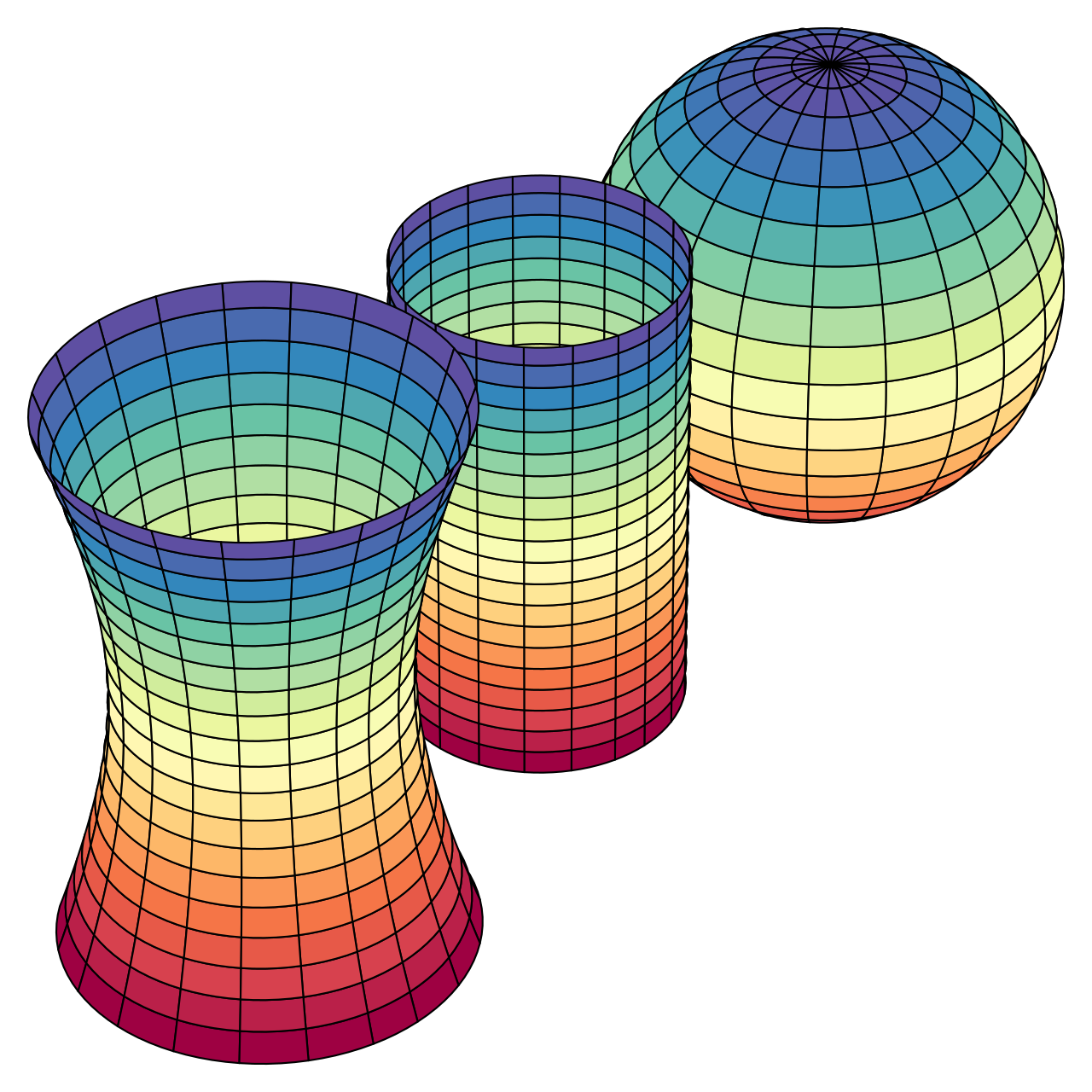

| Gaussian Curvature (Wikipedia) |

One of the core concepts in physics is the so-called metric tensor. This object encodes any kind of geometry. The combined genius of Gauß, Riemann and their contemporaries led to this great idea, probably one of the achievements of human quantitative enlightenment. However, notational conventions and the lack of an obvious pedagogical introduction make the object mathematically elusive. Einstein's notation made it more accessible, but it still requires a more explicit explanation. In this post we disassemble the definition of a distance over any geometry with an alternate notation.

Disassembling distance over Riemannian manifolds

The most general definition of a distance between two infinitesimally close points on any geometry (the fancy name for such a geometry is a Riemannian manifold) is given by the following definitions. A manifold is the subset of the geometry we are concerned with, and "Riemannian" implies a generalisation.

Definition 1: (Points on a Manifold) Any two points are defined in general coordinates $X$ and $Y$, as row and column vectors respectively, with infinitesimal components: $X = [dx_{1}, dx_{2}, \ldots, dx_{n}]$ and $Y = [dx_{1}, dx_{2}, \ldots, dx_{m}]^{T}$.

The geometric description between two points is defined via their tensor product, $\otimes$; that is to say, we form a grid on the geometry.

Definition 2: (An infinitesimal grid) A grid on a geometry is formed by pairing up each point's components, i.e., by a tensor product. This gives a matrix $P^{mn}$ ($P$ as in points), with first row $(dx_{1} dx_{1}, \ldots, dx_{1} dx_{n})$ and last row $(dx_{m} dx_{1}, \ldots, dx_{m} dx_{n})$.
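As an illustration of Definition 2, the grid can be built as the outer product of the column vector $Y$ with the row vector $X$. This is a minimal sketch, assuming plain nested Python lists stand in for matrices; the function name `grid` and the numeric components are hypothetical, chosen only for illustration.

```python
# Minimal sketch of Definition 2: the infinitesimal grid P (m x n) as the
# tensor (outer) product of a column vector Y (m components) and a row
# vector X (n components). Nested lists stand in for matrices (assumption).

def grid(x_row, y_col):
    """Return P with P[i][j] = y_col[i] * x_row[j], an m x n matrix."""
    return [[yi * xj for xj in x_row] for yi in y_col]


# Hypothetical infinitesimal components, for illustration only.
X = [0.1, 0.2, 0.3]  # row vector, n = 3
Y = [0.1, 0.2]       # column vector, m = 2

P = grid(X, Y)
print(P[0])   # first row: dx_1*dx_1, ..., dx_1*dx_n
print(P[-1])  # last row:  dx_m*dx_1, ..., dx_m*dx_n
```

Each row of `P` is one component of $Y$ multiplied against every component of $X$, matching the first and last rows given in the definition.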

Note that the grid here is used as a pedagogical tool; the points are actually leaves on the continuous manifold. Now, to compute the distance between grid points, $dS^{n}$, the metric tensor comes to the rescue.

Definition 3: (Metric tensor) A metric tensor $G^{nm}$ describes the geometry of the manifold and connects the infinitesimal grid to distance, such that $dS^{n}=G^{nm} P^{mn}$.
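A minimal numerical sketch of Definition 3 follows. The product $G^{nm} P^{mn}$ is an $n \times n$ matrix; reading $dS^{n}$ as its diagonal is our interpretation (an assumption, not stated in the definition). Summing that diagonal (the trace) recovers the familiar bilinear form $X G Y = \sum_{ij} G_{ij}\, dx_i\, dy_j$, i.e. the squared line element. The metric values and helper names below are hypothetical.

```python
# Minimal sketch of Definition 3 with plain nested lists (assumption).
# dS is read as the diagonal of G P (our interpretation); its sum is the
# squared distance, equal to the bilinear form X G Y.

def matmul(A, B):
    """Plain-Python matrix product of A (r x k) and B (k x c)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def grid(x_row, y_col):
    """Infinitesimal grid P[i][j] = y_col[i] * x_row[j] (m x n)."""
    return [[yi * xj for xj in x_row] for yi in y_col]


def distance_components(G, x_row, y_col):
    """Diagonal of G P: one contribution per component of X."""
    GP = matmul(G, grid(x_row, y_col))
    return [GP[i][i] for i in range(len(GP))]


# Hypothetical 2D example with a symmetric, non-diagonal metric.
G = [[2.0, 0.5],
     [0.5, 1.0]]
X = [0.1, 0.2]
Y = [0.1, 0.2]

dS = distance_components(G, X, Y)
ds2 = sum(dS)  # squared distance; no square root is taken
# Cross-check against the bilinear form X G Y.
bilinear = sum(X[i] * G[i][j] * Y[j] for i in range(2) for j in range(2))
print(dS, ds2, bilinear)
```

The cross-check works because $\mathrm{tr}(G\,Y X) = X G Y$, so the summed "distance vector" agrees with the usual expression for the squared line element.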

Note that these definitions can be extended to higher dimensions, i.e., when coordinates are no longer 1D. We omit the square root on the distance, as that is specific to the L2 distance. Here, we can think of $dS^{n}$ as a distance vector, while $G^{nm}$ and $P^{mn}$ are matrices.

Exercise: Euclidean Metric

A familiar geometry is Euclidean space, whose metric has all diagonal elements equal to 1 and all other elements equal to zero. Showing how the above definitions hold for it is left as an exercise.
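If you want to check your answer numerically, here is a minimal sketch, assuming $X$ and $Y$ carry the same displacement components and using plain Python lists. The helper names and the displacement values are hypothetical. With the identity metric, the trace of $G P$ should reduce to a plain sum of squares.

```python
import math


def identity(n):
    """Euclidean metric: ones on the diagonal, zeros elsewhere."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def squared_distance(G, x_row, y_col):
    """Trace of G P with P[k][i] = y_col[k] * x_row[i], i.e. X G Y."""
    return sum(G[i][k] * y_col[k] * x_row[i]
               for i in range(len(x_row)) for k in range(len(y_col)))


dx = [0.3, 0.4]  # hypothetical infinitesimal displacement
ds2 = squared_distance(identity(2), dx, dx)
# With the Euclidean metric, ds^2 is the sum of squares; taking the square
# root (omitted in the definitions) gives the ordinary L2 length.
print(ds2, math.sqrt(ds2), math.hypot(*dx))
```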

Conclusion

We have discussed that a metric tensor, contrary to its name, isn't a metric per se but an object that describes a geometry, with the seemingly magic ability to connect grids to distances.

Further reading

There are a lot of excellent books out there, but Spivak's classic on differential geometry is recommended.

Please cite as follows:

@misc{suezen24bumt,
  title = {Basic understanding of a metric tensor: Disassemble the concept of a distance over Riemannian Manifolds},
  howpublished = {\url{https://science-memo.blogspot.com/2024/04/metric-tensor-basic.html}},
  author = {Mehmet Süzen},
  year = {2024}
}