Preamble

Progress in machine learning, specifically so-called deep learning, has been astonishingly successful over the last decade in many areas, from computer vision to natural language translation, reaching automation close to human-level performance in narrow areas, so-called narrow artificial intelligence. At the same time, the scientific and academic communities have joined in, applying deep learning in physics and in the physical sciences in general. When it is used to assist known techniques, this is real progress, for example in drug discovery, in accelerating molecular simulations, or in astrophysical discoveries that help us understand the universe. Unfortunately, however, it is now an almost standard claim that one could supposedly replace physical laws with deep learning models. We criticise these claims in general, without naming any of our colleagues or their works.

Circular reasoning: Usage of data produced by known physics

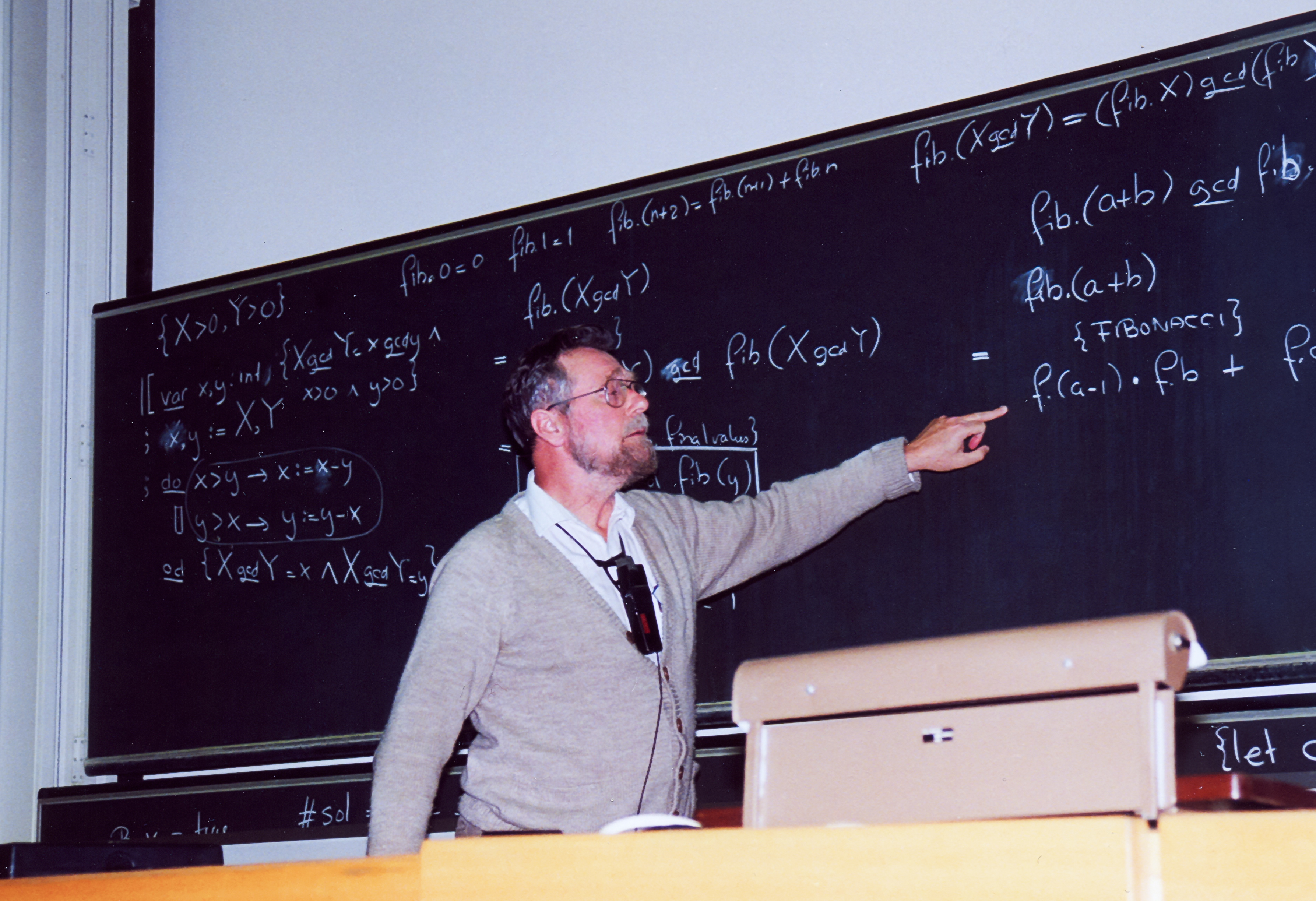

Blind monks examining an elephant (Wikipedia)

The primary fallacy in papers claiming to produce a learning system that can actually produce physical laws, or replace physics with a deep learning system, lies in how these systems are trained. Regardless of how good their predictions are, their primary ability is a product of already known laws. They can only replicate the laws embedded in datasets that were themselves generated by physical laws.

Faulty generalisation: Computational acceleration in narrow application to replacing laws

One of the major faults in concluding that a machine-learned inference system does better than the physical law is the faulty generalisation of computational acceleration achieved in narrow application areas. This acceleration cannot be generalised to the whole parameter space, because systems are usually trained on a restricted region of parameter space, using data that physical laws generated: for example, solving N-body problems, dynamics at any scale derived from an action or Lagrangian, or generating fundamental particle physics Lagrangians.
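The restricted-parameter-space point can be illustrated with a minimal sketch. Everything in it is an assumption chosen for illustration: a pendulum's angular acceleration stands in for "the physical law", a cubic polynomial fitted with NumPy stands in for the machine-learned surrogate, and the value of `G_OVER_L` and the angle ranges are arbitrary.

```python
import numpy as np

G_OVER_L = 9.81  # assumed g/L for a 1 m pendulum, in 1/s^2


def pendulum_acceleration(theta):
    """Known physical law that generates all of the training data."""
    return -G_OVER_L * np.sin(theta)


# Training data restricted to small angles (a narrow region of parameter space).
theta_train = np.linspace(-0.5, 0.5, 200)
coeffs = np.polyfit(theta_train, pendulum_acceleration(theta_train), deg=3)
surrogate = np.poly1d(coeffs)  # stand-in for a learned inference system


def max_abs_error(theta):
    """Largest deviation of the surrogate from the generating law."""
    return float(np.max(np.abs(surrogate(theta) - pendulum_acceleration(theta))))


err_inside = max_abs_error(np.linspace(-0.5, 0.5, 101))   # where it was trained
err_outside = max_abs_error(np.linspace(2.0, 3.0, 101))   # large angles, unseen

print(f"max error inside training range:  {err_inside:.2e}")
print(f"max error outside training range: {err_outside:.2e}")
```

Inside the training range the surrogate is nearly indistinguishable from the law that produced its data; at large angles it departs from it by orders of magnitude more. The surrogate may be cheap to evaluate, but its accuracy is borrowed from the law and only within the region the law was sampled.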

Benefits: Causality still requires a scientist

This short article aims to show the limitations of using machine-learned inference systems to discover scientific laws. There are of course benefits to leveraging machine learning and data science techniques in the physical sciences, especially in accelerating simulations in narrow specialised areas, automating tasks, and assisting scientists in cumbersome validations, such as searching and translating between two domains, especially in medicine and astrophysics, for example sorting images of galaxy formations. However, the results still need a skilled physicist or scientist to really understand them and to form a judgment about a scientific law or discovery, i.e., to establish causality.

Conclusion: No automated physicist or automated scientific discovery

Artificial general intelligence has not yet been established or achieved. It is to the benefit of the physical sciences that researchers do not claim to have found a deep learning system that can replace physical laws in supervised or semi-supervised settings, and instead concentrate on applications that benefit both theoretical and applied advancement in a down-to-earth fashion. Similarly, funding agencies should be more reasonable and avoid funding such claims.

In summary, if datasets are produced by known physical laws or mathematical principles, the new deep learning system only replicates what was already known, and this is not new knowledge, regardless of how well these systems predict or how they behave on new inputs. Caution is advised. We cannot yet replace physicists with machine-learned inference systems; in fact, not even radiologists have been replaced, despite the impressive advances in computer vision that produce super-human results.

@misc{suezen21fallacy,
  title = {On the fallacy of replacing physical laws with machine-learned inference systems},
  howpublished = {\url{http://science-memo.blogspot.com/2021/04/on-fallacy-of-replacing-physical-laws.html}},
  author = {Mehmet Süzen},
  year = {2021}
}

Postscripts

The following interpretations and reformulations were curated after the initial post.

Postscript 1: Regarding Symbolic regression

There are now multiple claims that one could replace physics with symbolic regression. Yes, symbolic regression is quite a powerful method. However, using raw data produced by physical laws (so-called simulation data from classical mechanics), or modelling experimental data guided by functional forms that physics provides, does not imply that one could replace physics or physical laws with a machine-learned system. We have not achieved Artificial General Intelligence (AGI), and symbolic regression is not AGI. Symbolic regression may not even be useful beyond serving as a verification tool for theory and for numerical solutions of physical laws.
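The circularity can be made concrete with a toy sketch. This is not a real symbolic regression library; it is a brute-force search over a small, hypothetical library of one-term candidate forms, applied to free-fall data that are simulated from the known law s = g t²/2. The names `candidates` and `fit_one_term` and the value of `G` are illustrative assumptions.

```python
import numpy as np

G = 9.81  # gravitational acceleration put into the simulator, in m/s^2

# "Simulation data": generated by the known law s = 0.5 * g * t**2.
t = np.linspace(0.1, 3.0, 50)
s = 0.5 * G * t**2

# Hypothetical candidate library of one-term forms s = c * f(t).
candidates = {
    "c*t": t,
    "c*t**2": t**2,
    "c*t**3": t**3,
    "c*sqrt(t)": np.sqrt(t),
    "c*exp(t)": np.exp(t),
}


def fit_one_term(feature, target):
    """Least-squares coefficient and squared residual for target ~ c * feature."""
    c = float(feature @ target / (feature @ feature))
    residual = float(np.sum((target - c * feature) ** 2))
    return c, residual


results = {name: fit_one_term(f, s) for name, f in candidates.items()}
best_form = min(results, key=lambda name: results[name][1])
best_coeff = results[best_form][0]

print(f"selected form: {best_form}, coefficient: {best_coeff:.4f}")
print(f"coefficient used by the simulator: {0.5 * G:.4f}")
```

The search returns the quadratic form with the coefficient g/2, which is exactly what the simulator was given. Used this way, the procedure verifies that the data are consistent with the law; it does not produce a law that was not already encoded in the data and in the candidate library.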

Postscript 2: Fallacy on the dimensionality reduction and distillation of physical laws with machine learning

There are now multiple claims that one could distill physical dynamical laws with dimensionality reduction. This is indeed a novel approach. However, the core dataset is generated by the very set of coupled dynamical equations that is supposed to be reduced, with a fixed set of initial conditions. This does not imply any kind of distillation of the original set of laws, i.e., the procedure cannot be qualified as distilling a set of equations into fewer equations or variates. It only provides an accelerated deployment of dynamical solvers under very specific conditions. This includes any renormalisation group dynamics.
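A minimal sketch of why a fixed set of initial conditions matters, under stated assumptions: two unit masses on springs of stiffness `K`, coupled by a spring of stiffness `K_C`, released from rest, with the trajectory taken from the analytic normal-mode solution, and a plain SVD standing in for the dimensionality reduction. All names and parameter values are illustrative.

```python
import numpy as np

K, K_C = 1.0, 0.5                # assumed spring and coupling stiffness (unit masses)
OMEGA_IN = np.sqrt(K)            # in-phase normal-mode frequency
OMEGA_OUT = np.sqrt(K + 2 * K_C) # out-of-phase normal-mode frequency


def trajectory(x1_0, x2_0, t):
    """Analytic solution of the two coupled oscillators, released from rest."""
    in_phase = (x1_0 + x2_0) * np.cos(OMEGA_IN * t)
    out_phase = (x1_0 - x2_0) * np.cos(OMEGA_OUT * t)
    return np.column_stack([0.5 * (in_phase + out_phase),
                            0.5 * (in_phase - out_phase)])


def second_to_first_singular_ratio(snapshots):
    """Ratio s2/s1 of the snapshot matrix; near zero means one component suffices."""
    singular_values = np.linalg.svd(snapshots, compute_uv=False)
    return float(singular_values[1] / singular_values[0])


t = np.linspace(0.0, 50.0, 2000)

# Fixed initial condition that excites only the in-phase mode.
ratio_fixed = second_to_first_singular_ratio(trajectory(1.0, 1.0, t))
# A different initial condition excites both modes of the same equations.
ratio_other = second_to_first_singular_ratio(trajectory(1.0, 0.0, t))

print(f"s2/s1 with x1(0) = x2(0) = 1: {ratio_fixed:.2e}")
print(f"s2/s1 with x1(0) = 1, x2(0) = 0: {ratio_other:.2e}")
```

With the first initial condition the data look one-dimensional, yet the governing equations are still two coupled equations; changing the initial condition restores the second component. The apparent reduction is a property of the sampled dataset, not a distillation of the laws.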

Postscript 3: New terms, Scientific Machine Learning Fallacy and s-PINNs

The use of symbolic regression with deep learning should be called symbolic physics-informed neural networks (s-PINNs). Calling these approaches “machine scientist”, “automated scientist”, or “physics laws generator” is technically a fallacy, i.e., the Scientific Machine Learning Fallacy, primarily caught up in circular reasoning.

Postscript 4: AutoML is a misnomer: Scientific Machine Learning (SciML) Fallacy

SciML is immensely promising in providing accelerated deployment of known scientific workflows in specialised areas such as trajectory learning, novel operator solvers, astrophysical image processing, molecular dynamics, and computational applied mathematics in general. Unfortunately, some recent papers continue to jump to claims of automated scientific discovery and of replacing known physical laws with supervised learning systems, including new NLP systems.

The primary fallacy in papers claiming to produce a learning system that can actually produce physical/scientific laws, or replace physics/science with a deep learning system, lies in how these systems are trained. AutoML in this context does not actually replace the scientist; it abstracts former workflows into a different kind of meta-scientific work that assists scientists. Hence it is a misnomer, and MetaML is probably the more suitable term.