
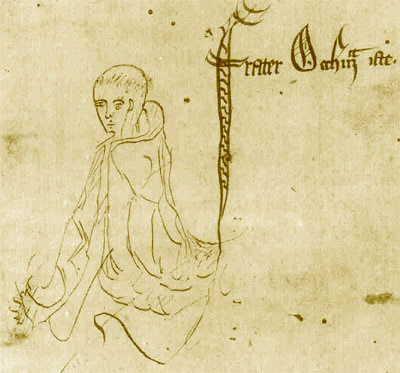

Illustration of William of Ockham (Wikipedia)

Why does model test performance not carry over to deployment? Understanding overfitting

A major contributing factor is the inaccurate meme of overfitting, which actually describes overtraining, together with the erroneous habit of tying overtraining solely to generalisation. This was discussed in an earlier post on understanding overfitting. Overfitting is not about how well the function approximation of the same “model” performs on other subsets of the dataset. Hence, the hold-out method (train/test split) of measuring performance does not provide necessary and sufficient conditions for judging a model’s generalisation ability: with this approach we cannot detect overfitting (in the Occam’s razor sense), nor can we predict deployment performance.
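As a toy illustration of the gap between hold-out and deployment performance, here is a minimal sketch. Everything in it is an assumption made for the example: the quadratic `true_fn`, the straight-line model, and the input ranges are hypothetical, and the deployment inputs are deliberately drawn from a different range than the training and test inputs.

```python
import random

random.seed(0)

def true_fn(x):
    # Hypothetical ground truth, chosen only for illustration.
    return x * x

def sample(lo, hi, n, noise=0.1):
    # Draw n noisy observations with inputs uniform on [lo, hi].
    xs = [random.uniform(lo, hi) for _ in range(n)]
    ys = [true_fn(x) + random.gauss(0.0, noise) for x in xs]
    return xs, ys

def fit_line(xs, ys):
    # Ordinary least squares for a straight line, in closed form.
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mse(model, xs, ys):
    # Mean squared error of the fitted line on (xs, ys).
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Train and hold-out test sets share one input range ...
x_train, y_train = sample(0.0, 1.0, 200)
x_test, y_test = sample(0.0, 1.0, 100)
# ... while the assumed deployment inputs come from a shifted range.
x_deploy, y_deploy = sample(2.0, 3.0, 100)

model = fit_line(x_train, y_train)
test_mse = mse(model, x_test, y_test)
deploy_mse = mse(model, x_deploy, y_deploy)
print(f"hold-out test MSE: {test_mse:.4f}")
print(f"deployment MSE:    {deploy_mse:.4f}")
```

The hold-out score looks fine because the test set is just another subset of the same data, while the error on the shifted inputs is far larger. The hold-out number alone gives no hint of this.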

How to mimic deployment performance?

This depends on the use case, but the most promising approaches lie in adaptive analysis: detecting distribution shifts and building models accordingly. However, the answer to this question is still open research.
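One simple way to flag a shift in a single numeric feature is a two-sample Kolmogorov-Smirnov check between training-time and deployment-time values. The sketch below is only an illustration, not a full adaptive-analysis method: the function names `ks_statistic` and `shift_detected` are hypothetical, the Gaussian samples are synthetic, and the threshold uses the standard large-sample approximation for the critical value.

```python
import bisect
import math
import random

def ks_statistic(a, b):
    # Largest gap between the two empirical CDFs, checked at every observed point.
    sa, sb = sorted(a), sorted(b)
    d = 0.0
    for x in sa + sb:
        fa = bisect.bisect_right(sa, x) / len(sa)
        fb = bisect.bisect_right(sb, x) / len(sb)
        d = max(d, abs(fa - fb))
    return d

def shift_detected(reference, incoming, alpha=0.05):
    # Asymptotic critical value for the two-sample KS test at level alpha.
    n, m = len(reference), len(incoming)
    critical = math.sqrt(-0.5 * math.log(alpha / 2)) * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, incoming) > critical

random.seed(0)
# Synthetic feature values: training-time data and mean-shifted deployment data.
reference = [random.gauss(0.0, 1.0) for _ in range(500)]
shifted = [random.gauss(1.0, 1.0) for _ in range(500)]

print("shift detected:", shift_detected(reference, shifted))
```

A check like this only says that the inputs have changed. What to do next (retrain, reweight, or rebuild the model) still depends on the use case.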
